Tailscale Changelog Review 1 of ?

tl;dr⌗

For folks using or building SaaS products who want an added level of security, App Connectors let you use IP restrictions without resorting to tunneling all of your users’ traffic over a VPN endpoint.

What is it?⌗

App Connectors fuse Tailscale’s subnet routing and MagicDNS capabilities, allowing you to route by domain name instead of IP. In practice, this allows you to provide the domain name of a public SaaS, such as github.com, and the app connector becomes the relay for all traffic destined for that domain on your tailnet. With all the traffic to github.com servers flowing over an app connector node with a static IP, you can then enable advanced security features like GitHub’s organizational IP allowlist, ensuring that only users actively on your tailnet can access the company’s GitHub repos and no one else can, even if they have an employee’s credentials. Tailscale’s announcement blog post goes into more depth about the magic behind the feature.
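
To make the "route by domain name" idea concrete, here is a minimal sketch of the decision being made for each hostname. It assumes a connector configured for `github.com` and `*.github.com`, and it uses shell glob patterns to stand in for the matching; the function name `routed_via_connector` is made up for illustration, and Tailscale's real matching logic lives inside the client and may differ in its details.

```shell
#!/bin/sh
# Illustrative only: decide whether a hostname falls under a connector's
# domain list (assumed here to be github.com and *.github.com).
# This imitates the idea with shell patterns; it is not Tailscale's code.
routed_via_connector() {
    case "$1" in
        github.com|*.github.com) return 0 ;;  # relayed through the app connector
        *) return 1 ;;                        # takes the device's normal route
    esac
}

for h in github.com api.github.com zoom.us; do
    if routed_via_connector "$h"; then
        echo "$h -> app connector"
    else
        echo "$h -> direct"
    fi
done
```

Only the listed domains are relayed; everything else, such as a video call, keeps using the device's regular connection.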

Previously, those web application firewall (WAF) features weren’t practical for a small or remote company to use, because you would need to deploy a VPN and essentially bottleneck all employees’ traffic through it before they could access the service. This creates significant logistical and performance issues that larger companies with bigger IT teams and more resources can address, and even then the experience still isn’t great.

Tailscale’s approach of only routing the traffic destined for the SaaS, and only opening WireGuard tunnels when a client’s traffic demands it, makes it significantly simpler and less disruptive to end users. Your clients’ devices may have Tailscale running all the time, but they only have active WireGuard sessions when their actions require it. A developer’s Zoom meeting is uninterrupted as they perform a git clone from GitHub, even though behind the scenes, as soon as the git session initiates, it opens a WireGuard tunnel to the app connector and all of that traffic flows over the encrypted tunnel.

In the traditional bottleneck approach, the same developer might have had to pick between being on Zoom and accessing GitHub, something that can likely be worked around. The same couldn’t be said of a salesperson picking between Zoom and accessing Salesforce (which has the same WAF features). That’s where the value of Tailscale’s solution really shows itself - you can extend an additional layer of security to everyone in the organization without subjecting them to some complex VPN service that requires extensive end-user management and adjustments to their workflow.

Trying it out⌗

I’ve gone through the docs to try deploying an App Connector myself. You can follow along below to try it for yourself, as I’ve streamlined it a little bit. For a SaaS to use, I’m targeting the icanhazip service, because it illustrates which IP the traffic is coming from, and it supports IPv6, so I have more than one IP address at my disposal to show the difference.

Update Tailscale Policy, adding a tag for the connector:

    "tagOwners": {
        ...
        "tag:icanhazip": ["autogroup:admin"],
        ...
    }

Grant the tag permission to automatically approve any routes it discovers (best practice is to remove this once the routes are discovered):

    "autoApprovers": {
        "routes": {
            "0.0.0.0/0": ["tag:icanhazip"],
            "::/0": ["tag:icanhazip"],
        },
    },

This section might already be present if exit nodes were already enabled in the ACLs, but ensure this rule exists. If it doesn’t, the App Connectors will show as healthy but nodes won’t be able to route public-destined traffic to them, so they will appear to be broken:

    "acls": [
        {
            "action": "accept",
            "src": ["autogroup:member"],
            "dst": ["autogroup:internet:*"],
        },
    ],

Define the App Connector here in the policy file instead of doing it via the web UI. The name is what shows up in the UI and logs, the connectors are the tags applied to the nodes running the app connector service (so, where the traffic should egress from), and the domains are the destination services this connector should handle. For more details, check the docs for the UI version.

    "nodeAttrs": [
        {
            "target": ["*"],
            "app": {
                "tailscale.com/app-connectors": [
                    {
                        "name": "icanhazip",
                        "connectors": ["tag:icanhazip"],
                        "domains": ["icanhazip.com", "*.icanhazip.com"],
                    }
                ]
            }
        }
    ]
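
Since a missing piece of policy can leave the connector looking healthy but not working, a quick pre-flight check on a local copy of the policy file can help before saving it. The sketch below is a crude text search, not a HuJSON parser: it only confirms that the connector tag, the `autogroup:internet` rule, and the app connector attribute each appear somewhere in the file. The function name `policy_preflight` and the file name in the usage note are assumptions for illustration.

```shell
#!/bin/sh
# policy_preflight FILE TAG
# Crude text check (not a parser): succeeds only if FILE mentions the quoted
# TAG, the autogroup:internet destination, and the app-connectors attribute.
policy_preflight() {
    file=$1
    tag=$2
    missing=0
    for needle in "\"$tag\"" '"autogroup:internet:*"' '"tailscale.com/app-connectors"'; do
        # -F treats the needle as a fixed string, so the * is matched literally
        if ! grep -qF -- "$needle" "$file"; then
            echo "missing from policy: $needle" >&2
            missing=1
        fi
    done
    [ "$missing" -eq 0 ]
}
```

For example, `policy_preflight policy.hujson tag:icanhazip && echo "looks complete"` would print the message only when all three strings are present. It cannot tell whether they are in the right sections, so it complements rather than replaces the admin console's own validation.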

Deploying the app connector requires IP forwarding to be enabled on the host, just like an exit node:

    echo 'net.ipv4.ip_forward = 1' | sudo tee -a /etc/sysctl.d/99-tailscale.conf
    echo 'net.ipv6.conf.all.forwarding = 1' | sudo tee -a /etc/sysctl.d/99-tailscale.conf
    sudo sysctl -p /etc/sysctl.d/99-tailscale.conf

    # Also on bookworm/raspberry pi os
    # https://tailscale.com/kb/1320/performance-best-practices#ethtool-configuration
    sudo nmcli con modify "Wired connection 1" ethtool.feature-rx-udp-gro-forwarding on ethtool.feature-rx-gro-list off
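
Before moving on, it can be worth confirming that the forwarding settings actually took effect. This is a small sketch that reads the kernel's current values from `/proc/sys` on Linux; the function name `forwarding_enabled` and its optional root-directory argument are my own additions, the latter only so the check can be pointed at a fake directory when testing.

```shell
#!/bin/sh
# forwarding_enabled [PROC_SYS_ROOT]
# Succeeds only if both IPv4 and IPv6 forwarding read back as "1".
# PROC_SYS_ROOT is a hypothetical override; it defaults to /proc/sys.
forwarding_enabled() {
    root=${1:-/proc/sys}
    v4=$(cat "$root/net/ipv4/ip_forward" 2>/dev/null)
    v6=$(cat "$root/net/ipv6/conf/all/forwarding" 2>/dev/null)
    [ "$v4" = "1" ] && [ "$v6" = "1" ]
}

if forwarding_enabled; then
    echo "IPv4 and IPv6 forwarding are enabled"
else
    echo "forwarding is not fully enabled yet"
fi
```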

Once the above changes are made, install and start Tailscale, then log in to authenticate the node:

    curl -fsSL https://tailscale.com/install.sh | sh
    sudo tailscale up --advertise-connector --advertise-tags=tag:icanhazip

    To authenticate, visit:

        https://login.tailscale.com/a/XXXXXXXXXX

    Success.

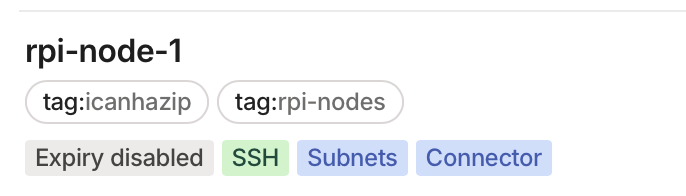

There is now a new machine on the Tailnet, this one showing its role as a Connector:

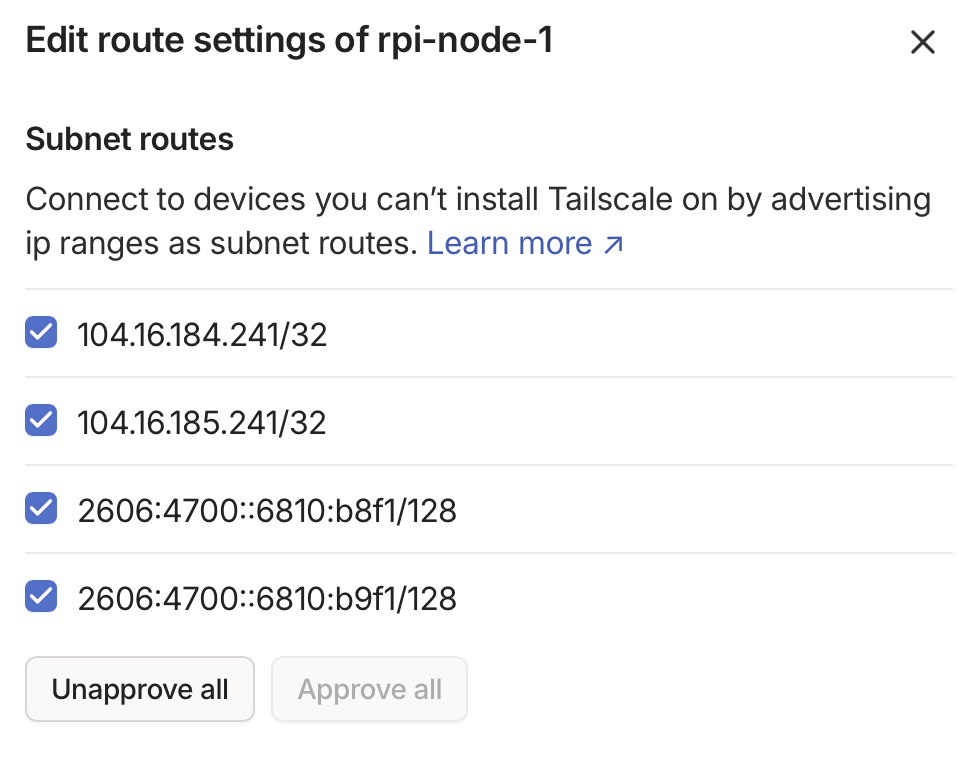

From another machine on the Tailnet, load ipv6.icanhazip.com and ipv4.icanhazip.com. On first load, this machine’s traffic will not be routed over the App Connector, as the connector is still learning the IPs and updating the route tables. Once both pages have loaded, checking the Route settings for the App Connector will reveal the discovered routes.
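
One way to picture the route learning: each address the connector sees in a DNS answer for a configured domain becomes its own route. The sketch below assumes those show up as single-host prefixes (`/32` for IPv4, `/128` for IPv6); that is my reading of how the discovered routes are listed, not something I have confirmed in Tailscale's source. The helper name `ip_to_route` is made up for illustration.

```shell
#!/bin/sh
# ip_to_route ADDRESS
# Prints the single-host route prefix for an address, assuming discovered
# IPs are advertised as /32 (IPv4) or /128 (IPv6) routes.
ip_to_route() {
    case "$1" in
        *:*) printf '%s/128\n' "$1" ;;  # contains a colon: treat as IPv6
        *.*) printf '%s/32\n' "$1" ;;   # dotted form: treat as IPv4
        *)   return 1 ;;                # not something we recognize
    esac
}

# 104.16.185.241 is the address ipv4.icanhazip.com resolved to in this post
ip_to_route 104.16.185.241
```

Because the routes are per address rather than per subnet, only traffic to the addresses actually resolved for the configured domains is pulled through the connector.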

Some examples testing the connectivity:

    # testing from the app connector
    curl ipv6.icanhazip.com
    XXXX:XXXX:XXXX:10:af62:d78f:53d8:457d

    # testing from a laptop with tailscale off
    curl -6 https://ipv6.icanhazip.com
    XXXX:XXXX:XXXX:32:7d3a:3c2d:1850:1645

    # testing from a laptop with tailscale on, IP matches app connector
    curl -6 https://ipv6.icanhazip.com
    XXXX:XXXX:XXXX:10:af62:d78f:53d8:457d

    # traceroute showing the path through the app connector
    traceroute ipv4.icanhazip.com
    traceroute: Warning: ipv4.icanhazip.com has multiple addresses; using 104.16.185.241
    traceroute to ipv4.icanhazip.com (104.16.185.241), 64 hops max, 40 byte packets
     1 rpi-node-1.duckbill-mongoose.ts.net (100.111.22.139) 98.648 ms 3.072 ms 2.637 ms
     2 * * *
     3 * * *
     4 * * *
     5 * * *
     6 * * *
     7 * * *
     8 104.16.185.241 (104.16.185.241) 10.943 ms 10.585 ms 9.774 ms
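
The manual comparison above can be wrapped into a small check that a client's egress IP matches the connector's. This is a sketch under a few assumptions: the function name `egress_via_connector` is made up, the `IP_FETCH_CMD` variable is a hypothetical hook I added so the fetch can be swapped out for testing, and the comparison is a plain string match, so the expected address has to be written exactly as icanhazip prints it.

```shell
#!/bin/sh
# egress_via_connector EXPECTED_IP [URL]
# Exit status: 0 if the observed public IP equals EXPECTED_IP,
#              1 if it differs, 2 if the lookup itself failed.
egress_via_connector() {
    expected=$1
    url=${2:-https://ipv4.icanhazip.com}
    # IP_FETCH_CMD is a hypothetical override for testing; the default is curl.
    observed=$(${IP_FETCH_CMD:-curl -fsS} "$url") || return 2
    # Plain string comparison: no normalization of IPv6 notation is attempted.
    [ "$observed" = "$expected" ]
}
```

With the connector's public address in hand, something like `egress_via_connector 203.0.113.7 && echo "going through the connector"` could run on a laptop after Tailscale is switched on (203.0.113.7 is a documentation placeholder, not a real address from this setup).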

Impressions⌗

This is a really impressive feature and something I could see being useful for any business wanting to increase their users’ security. The fact that this happens at the network layer and doesn’t require any end-user intervention besides “signing in to Tailscale” on their device to be usable is what really sells this for me. I’ve seen tools like StrongDM and others that try to provide a similar way to relay traffic through a set of known IPs before going to a SaaS, but they usually require more in-depth end-user setup, relying on management tools to install a proxy in the browser or a menu bar app to provide alternative URLs for accessing a portal. They got the job done, but required a lot of handholding and individual troubleshooting that Tailscale’s approach just avoids. If you’re on the tailnet with the App Connector, traffic for those domains goes over the tailnet to those services, via the App Connector, and that’s it. It is hard to overstate how important end-user ease of use is for the security of a company - if it’s complicated or difficult, they’ll find ways to avoid it or work around it. This being simple to deploy with little end-user device interaction besides “have Tailscale installed and running” makes this a feature that is ten times more likely to be adopted without issue and therefore actually provide security to the business as a whole.

This access isn’t just for big-name SaaS products. If you’re a SaaS business yourself or have other public resources that you’d want to restrict access to (such as your product’s admin page), these app connectors could be deployed. They aren’t just for public-facing services either: the domain lookup and route registration features can apply to complex intranets or other internal networks where the idea of exposing an entire subnet creates more problems than it solves. Getting permission from the network or security team to deploy an app connector will likely be easier than for a subnet router, if only because one sounds more precise and task-focused while the other brings to mind granting extensive access, even though both apply the same technology in slightly different ways.

Relatedly, I know that for Gitpod customers who wanted to deploy Tailscale to access their on-prem resources, getting permission for a generic Subnet Router was very difficult. If instead they had the option of an App Connector configured to expose just the IPs associated with an internal service’s domain names, it would have required much less back and forth between security, network, and compliance teams.

One downside is that you can’t write ACLs around who can access the app connector inside the tailnet, so while this provides a step up in the level of security, there’s no way yet (I think) to integrate device posture into a rule stating that only recently patched macOS machines can reach foo.com. Once you can allocate different nodes to different app connectors, I can see this opening up some really creative use cases, especially for any SaaS platform that does customer data ingestion via workers. Previously, they’d have to ask their customers to allow traffic from any of their possible workers’ IPs, which could essentially be a NAT gateway in front of their entire Kubernetes cluster. Now the workers’ traffic to the customer’s platform or service can be routed (and logged) separately via app connectors, minimizing the customer’s risk without having to grant blanket internet access to the Kubernetes cluster in the process.